Sunday, October 21, 2007

Max Column Length

DECLARE ColumnList CURSOR

FOR

SELECT c.[name] AS ColumnName

FROM sys.Columns c

JOIN sys.Tables t

ON c.Object_ID = t.Object_ID

WHERE t.Name = 'reyAASerWIP'

DECLARE @ColumnName sysname

OPEN ColumnList

FETCH NEXT FROM ColumnList INTO @ColumnName

WHILE @@FETCH_STATUS = 0

BEGIN

PRINT @ColumnName

EXECUTE('SELECT MAX(LEN(RTRIM([' + @ColumnName + ']))) AS [' + @ColumnName + '] FROM reyAASerWIP ')

FETCH NEXT FROM ColumnList INTO @ColumnName

END

CLOSE ColumnList

DEALLOCATE ColumnList

Sunday, October 14, 2007

Importing CSVs with SSIS

The CSV import process is much more difficult with SSIS than it was with DTS. Judging from the MS newsgroups, many of the problems will be corrected in SQL Server 2008. That's a long way off, so let's look at some of the issues and how we can solve them.

Our CSV looks like this:

1- Name, Address, PhoneNumber, InvoiceAmount

2- "Fred Flintstone", "365 Stone Ave, BedRock, StoneAge", "928-555-1212", "25.00"

3- "Barney Rubble", "152 Granite Street, BedRock, StoneAge", "928.555.6326"

4- "Mister Slate", "52 Diamond Road, BedRock, StoneAge", "928.555.5332", "14.00"

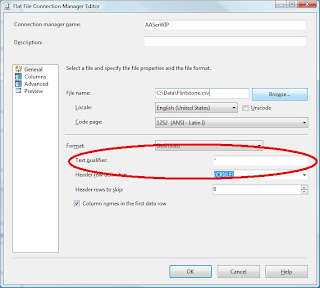

The first problem is that the Address field contains commas inside the quotation marks. Without further configuration, SSIS splits on those commas and puts BedRock in the PhoneNumber column and StoneAge in the InvoiceAmount column. This is very easy to solve. In the properties window of the Flat File Connection Manager, you will see a textbox labeled "Text qualifier." Put a quotation mark in that field and SSIS will treat the commas inside the quoted address as data rather than as delimiters.
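To see what a text qualifier changes, here is a minimal sketch in Python (used only as an illustration outside SSIS; it is not part of the package). It parses record #2 twice: once by splitting on every comma, and once with a quote-aware parser from Python's standard csv module. The variable names are made up for this example.

```python
import csv

# Record #2 from the sample file above.
line = '"Fred Flintstone", "365 Stone Ave, BedRock, StoneAge", "928-555-1212", "25.00"'

# Naive split: every comma counts as a delimiter, so the address is broken apart.
naive_fields = line.split(",")

# Quote-aware parse: '"' acts as the text qualifier, so commas inside quotes are data.
# skipinitialspace=True ignores the blank that follows each delimiter in this file.
qualified_fields = next(csv.reader([line], quotechar='"', skipinitialspace=True))

print(len(naive_fields), "fields without a text qualifier")
print(len(qualified_fields), "fields with a text qualifier:", qualified_fields)
```

The quote-aware parse returns four fields with the full address intact, which is the behavior the "Text qualifier" setting turns on.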

The next issue is that record #3 does not have the appropriate number of columns. It is one column short. DTS would handle this by inserting NULLs in the missing fields and then moving on to the next record. SSIS chokes on this. It will attempt to put "Mister Slate" in the InvoiceAmount field of record #3.

To solve this issue, I wrote the following script. I had to write it in VB.NET, since SSIS does not allow C# quite yet.

Imports System

Imports System.Data

Imports System.Math

Imports Microsoft.SqlServer.Dts.Runtime

Imports System.IO

Imports System.Text

Public Class ScriptMain

Public Sub Main()

Dim myLineCount As Int32 = 0

Dim myFilePath As String = Dts.Connections("FlintstoneCSV").ConnectionString

Dim myNewFilePath As String = Dts.Connections("NewFlintstoneCSV").ConnectionString

Dim myNewFile As FileInfo = New FileInfo(myNewFilePath)

Dim myNewFileStream As FileStream = myNewFile.Create()

Dim myFileStream As FileStream = New FileStream(myFilePath, FileMode.OpenOrCreate, FileAccess.ReadWrite, FileShare.ReadWrite)

Dim myStreamReader As New StreamReader(myFileStream)

Dim myStreamWriter As StreamWriter = New StreamWriter(myNewFileStream, Encoding.ASCII)

Dim myLine As String

Try

myLine = myStreamReader.ReadLine

While myLine IsNot Nothing

Dim myOffSet As Int32 = 1

Dim myBeginIndex As Int32

Dim myEndIndex As Int32

Dim myCount As Int32 = 0

Dim myColumnCount As Int32 = 41 'Number of commas each row should end up with (columns - 1); adjust for your file

Dim myCommaCount As Int32 = 0

Dim myChar As Char

'myLineCount += 1

'Dts.Events.FireInformation(0, "Cleaning CSV", "Processing Line " + myLineCount.ToString(), "", 0, True)

Dim myLineChars As Char() = myLine.ToCharArray()

While myCount <= myLineChars.Length - 1

If myLineChars(myCount) = """" Then

myCount = InStr(myCount + 2, myLine, """")

Else

If myLineChars(myCount) = Convert.ToChar(",") Then

myCommaCount += 1

End If

myCount += 1

End If

End While

Dim myCommas As String = ""

If myColumnCount - myCommaCount > 0 Then

myCommas = New String(Convert.ToChar(","), myColumnCount - myCommaCount)

End If

myStreamWriter.WriteLine(myLine.Replace(vbCrLf, "") + myCommas)

myLine = myStreamReader.ReadLine

End While

Dts.TaskResult = Dts.Results.Success

Catch ex As Exception

Dts.Events.FireError(0, "Cleaning CSV", "Encountered Error " + ex.Message + " While Processing Line " + myLine, "", 0)

Dts.TaskResult = Dts.Results.Failure

Finally

myStreamReader.Close()

myStreamReader.Dispose()

myStreamWriter.Flush()

myStreamWriter.Close()

myFileStream.Close()

End Try

End Sub

End Class

Originally, I called Dts.Events.FireInformation on every line to report how many records had been processed. It turns out that calls to Dts.Events are very slow. After I removed those lines (they are commented out in the script above), processing was much faster.
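The padding idea in the script can also be tried outside SSIS. The following is a minimal Python sketch of the same approach, not a translation of the script: count the commas that sit outside quotation marks, then append however many are missing. The function names and the expected comma count of 3 (for a four-column file) are assumptions made for this example.

```python
def count_unquoted_commas(line):
    """Count commas that are not inside a pair of quotation marks."""
    in_quotes = False
    count = 0
    for ch in line:
        if ch == '"':
            in_quotes = not in_quotes  # entering or leaving a quoted field
        elif ch == "," and not in_quotes:
            count += 1
    return count


def pad_line(line, expected_commas):
    """Append trailing commas so the row has the expected number of delimiters."""
    missing = expected_commas - count_unquoted_commas(line)
    return line + "," * max(missing, 0)


# A row that is one column short: only two delimiters outside the quotes.
short_row = '"Barney Rubble", "152 Granite Street, BedRock, StoneAge", "928.555.6326"'
print(pad_line(short_row, expected_commas=3))
```

Rows that already have enough delimiters come back unchanged, so the function can be applied to every line of the file.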

I recently imported a CSV file with very dirty data. It included fields that stretch across multiple lines and fields with quotes in the data. After struggling with SSIS, I finally decided to write my own CSV importer. As I was beginning my project and doing CSV research, I stumbled upon a project called "A Fast CSV Reader."

http://www.codeproject.com/cs/database/CsvReader.asp

I used this code along with a SqlBulkCopy object from .NET 2.0. It imported 9 million large rows of dirty data in just a few minutes. I was amazed. Whatever cleaning it didn't do, I will do in a stored procedure.

Here is the extent of the code I wrote myself:

using (LumenWorks.Framework.IO.Csv.CsvReader

myCSVReader = new LumenWorks.Framework.IO.Csv.CsvReader(

new StreamReader("c:\\Data\\Flintstone.csv", Encoding.ASCII), true,

Convert.ToChar(","), Convert.ToChar("\""), Convert.ToChar("\\"), Convert.ToChar("#"), true))

{

myCSVReader.DefaultParseErrorAction = ParseErrorAction.ThrowException;

myCSVReader.MissingFieldAction = MissingFieldAction.ReplaceByEmpty;

myCSVReader.SkipEmptyLines = true;

myCSVReader.SupportsMultiline = true;

using (SqlBulkCopy myBulkCopy = new SqlBulkCopy(SqlHelper.ConnectionString))

{

myBulkCopy.DestinationTableName = "Destination";

myBulkCopy.BulkCopyTimeout = 30;

myBulkCopy.BatchSize = 10000;

myBulkCopy.WriteToServer(myCSVReader);

}

}

MessageBox.Show("Import Success");
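For readers who want to experiment with the same pattern without SQL Server, here is a rough Python sketch of the idea: stream rows out of a tolerant CSV parser and insert them in batches. It is an analogy only. Python's csv module stands in for CsvReader, an in-memory SQLite database stands in for SqlBulkCopy and the destination server, and the function names, sample data, and four-column table layout are assumptions made for this example.

```python
import csv
import io
import sqlite3
from itertools import islice


def read_rows(text_stream, width):
    """Yield data rows with exactly `width` fields, filling missing fields with ''."""
    reader = csv.reader(text_stream, skipinitialspace=True)
    next(reader, None)  # skip the header row
    for row in reader:
        if not row:  # skip empty lines
            continue
        if len(row) > width:
            raise ValueError("too many fields: %r" % row)
        yield row + [""] * (width - len(row))


def bulk_insert(conn, rows, batch_size=10000):
    """Insert rows in batches of `batch_size` and return the number inserted."""
    rows = iter(rows)
    total = 0
    while True:
        batch = list(islice(rows, batch_size))
        if not batch:
            return total
        conn.executemany("INSERT INTO Destination VALUES (?, ?, ?, ?)", batch)
        conn.commit()
        total += len(batch)


# Sample data: one quoted field spans two lines, and one row is a field short.
sample = (
    'Name, Address, PhoneNumber, InvoiceAmount\n'
    '"Fred Flintstone", "365 Stone Ave,\nBedRock, StoneAge", "928-555-1212", "25.00"\n'
    '\n'
    '"Barney Rubble", "152 Granite Street", "928.555.6326"\n'
)

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE Destination (Name TEXT, Address TEXT, PhoneNumber TEXT, InvoiceAmount TEXT)"
)
inserted = bulk_insert(conn, read_rows(io.StringIO(sample), width=4), batch_size=1)
print(inserted, "rows inserted")
```

Because the rows are produced by a generator and consumed one batch at a time, the whole file never has to be held in memory, which is the same reason the reader-plus-bulk-copy combination scales to large files.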